The Big in Big Data relates to importance not size

28 May 2014

In the past couple of years, several non-statisticians have asked me “What is Big Data exactly?” or “How big is Big Data?” My answer has been: “I think Big Data is much more about ‘data’ than ‘big’.” I explain below.


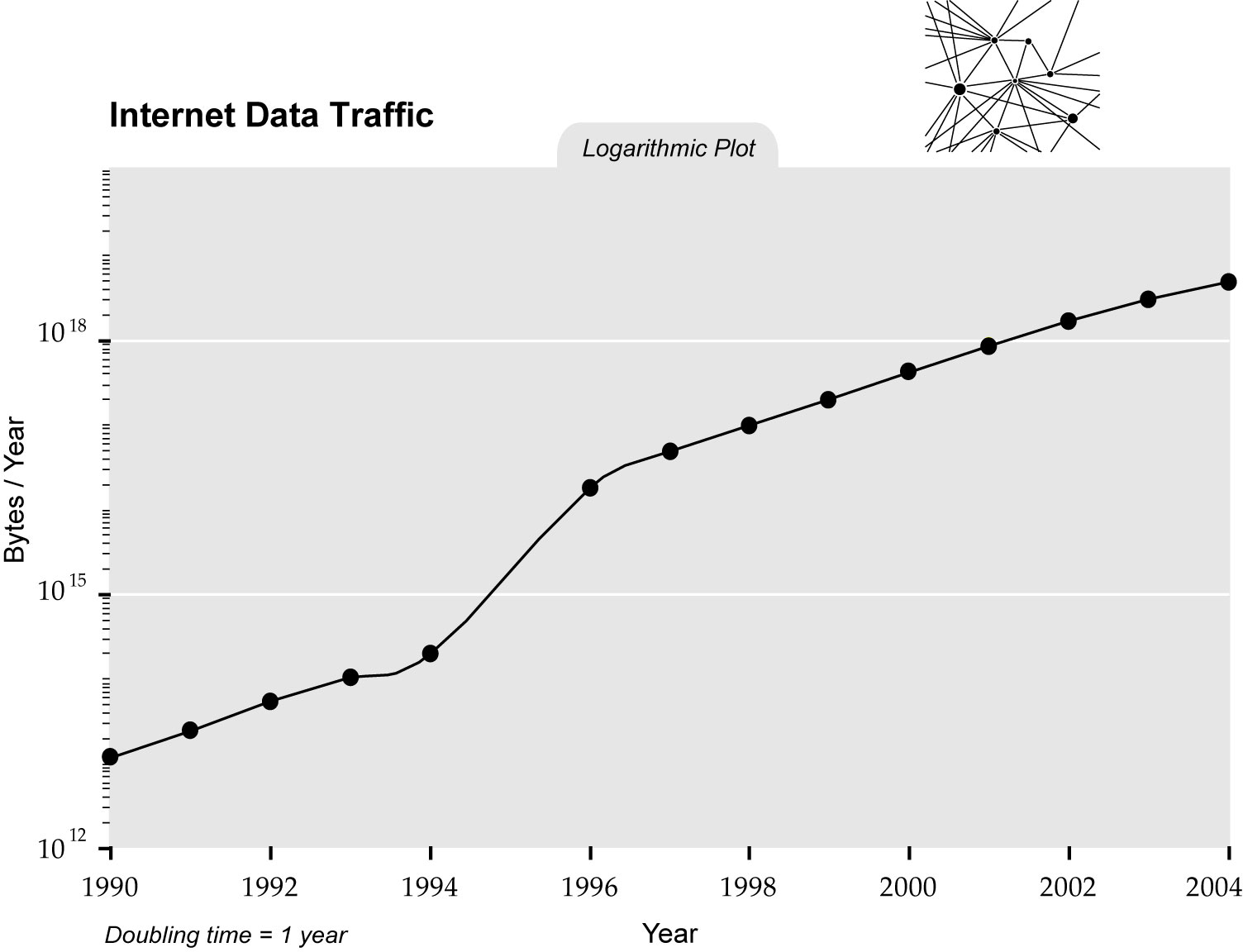

Since 2011, Big Data has been all over the news. The New York Times, The Economist, Science, Nature, etc. have told us that the Big Data Revolution is upon us (see the Google Trends figure above). But was this really a revolution? What happened to the Massive Data Revolution (see figure above)? For this to be called a revolution, there must be some drastic change, a discontinuity, or a quantum leap of some kind. So has there been such a discontinuity in the rate of growth of data? Although this may be true for some fields (for example, in genomics, next generation sequencing did introduce a discontinuity around 2007), overall, data size seems to have been growing at a steady rate for decades. For example, in the graph below (see this paper for source), note the trend in internet traffic data (which btw dwarfs genomics data). There does seem to be a change of rate, but it occurred during the 1990s, which brings me to my main point.

Although several fields (including Statistics) are having to innovate to keep up with growing data size, I don’t see this as something that new. But I do think that we are in the midst of a Big Data revolution. Although the media only noticed it recently, it started about 30 years ago. The discontinuity is not in the size of data, but in the percentage of fields (across academia, industry and government) that use data. At some point in the 1980s, with the advent of cheap computers, data were moved from the file cabinet to the disk drive. Then in the 1990s, with the democratization of the internet, these data started to become easy to share. All of a sudden, people could use data to answer questions that were previously answered only by experts, theory or intuition.

In this blog we like to point out examples, but let me review a few. Credit card companies started using purchase data to detect fraud. Baseball teams started scraping data and evaluating players without ever seeing them. Financial companies started analyzing stock market data to develop investment strategies. Environmental scientists started to gather and analyze data from air pollution monitors. Molecular biologists started quantifying outcomes of interest into matrices of numbers (as opposed to looking at stains on nylon membranes) to discover new tumor types and develop diagnostic tools. Cities started using crime data to guide policing strategies. Netflix started using customer ratings to recommend movies. Retail stores started mining bonus card data to deliver targeted advertisements. Note that all the data sets mentioned were tiny in comparison to, for example, sky survey data collected by astronomers. But I still call this phenomenon Big Data because the percentage of people using data was in fact Big.

I borrowed the title of this talk from a very nice presentation by Diego Kuonen.
