21 Mar 2016

Editor’s note: This is a guest post by Andrew Jaffe.

“How do you get to Carnegie Hall? Practice, practice, practice.” (“The Wit Parade” by E.E. Kenyon on March 13, 1955)

“...an extraordinarily consistent answer in an incredible number of fields … you need to have practiced, to have apprenticed, for 10,000 hours before you get good.” (Malcolm Gladwell, on Outliers)

I have been a data scientist for the last seven or eight years, probably since before “data science” existed as a field. I work almost exclusively in the R statistical environment, which I first toyed with as a sophomore in college and used more and more through graduate school. I write all of my code in Notepad++ and make all of my plots with base R graphics, rather than using newer and probably easier approaches like RStudio, ggplot2, and R Markdown. Every so often, someone will email me asking for the code used for the analyses or plots in a paper, and I dig through old folders to track it down. Every time this happens, I come to two realizations: 1) I used to write fairly inefficient and not-so-great code as an early PhD student, and 2) I write a lot of R code.

I think there are some pretty good ways of measuring success and growth as a data scientist: you can count software packages and their user bases, projects and papers, citations, grants, and promotions. But I wanted to calculate one more metric to add to the list: how much R code have I written in the last 8 years? I have been using the Joint High Performance Computing Exchange (JHPCE) at Johns Hopkins University since I started graduate school, so nearly all of my R code was in one place. I therefore decided to spend my Friday night drinking some Guinness and chronicling my journey with R and my evolution as a data scientist.

I found all of the .R files across my four main directories on the computing cluster (after copying over my local scripts). I then removed files that came with packages, files that belonged to other users, and files that resulted from poorly designed simulation and permutation analyses (perm1.R,…,perm100.R) written before I learned how to use array jobs. For each remaining R script, I extracted the creation date, last modified date, file size, and line count. Based on this analysis, I have written 3,257 R scripts totaling 13.4 megabytes and 432,753 lines of code (including whitespace and comments) since February 22, 2009.
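The post does not spell out how the files were found and measured (the author's own code is linked at the end of the post). As a rough illustration only, this Python sketch walks a set of directories, skips non-.R files and numbered permutation scripts, and records each script's last-modified time, size, and line count. The `perm\d+\.R` filter, the `scan_r_scripts` and `summarize` names, and the choice of 1 MB = 10^6 bytes are all assumptions. It records only the last-modified time, because a true file creation time is not portably available from `os.stat` (on Linux, `st_ctime` is the metadata-change time, not the creation time).

```python
import os
import re
from dataclasses import dataclass
from datetime import datetime

# Hypothetical filter for numbered copies such as perm1.R ... perm100.R.
SKIP_NAME = re.compile(r"^perm\d+\.R$")


@dataclass
class ScriptInfo:
    path: str
    modified: datetime  # last-modified time taken from st_mtime
    size_bytes: int
    n_lines: int


def count_lines(path: str) -> int:
    """Count every line, including blank and comment-only lines."""
    with open(path, "rb") as fh:
        return sum(1 for _ in fh)


def scan_r_scripts(roots: list[str]) -> list[ScriptInfo]:
    """Walk each root directory and collect metadata for every .R file."""
    scripts = []
    for root in roots:
        for dirpath, _dirnames, filenames in os.walk(root):
            for name in filenames:
                if not name.endswith(".R") or SKIP_NAME.match(name):
                    continue
                path = os.path.join(dirpath, name)
                st = os.stat(path)
                scripts.append(ScriptInfo(
                    path=path,
                    modified=datetime.fromtimestamp(st.st_mtime),
                    size_bytes=st.st_size,
                    n_lines=count_lines(path),
                ))
    return scripts


def summarize(scripts: list[ScriptInfo]) -> dict:
    """Totals comparable to those reported above (assumes 1 MB = 1e6 bytes)."""
    return {
        "n_scripts": len(scripts),
        "megabytes": sum(s.size_bytes for s in scripts) / 1e6,
        "n_lines": sum(s.n_lines for s in scripts),
    }
```

For example, `summarize(scan_r_scripts(["project_a", "project_b"]))` returns the script count, total megabytes, and total lines; the directory names are placeholders. Counting raw lines in binary mode means a final line without a trailing newline is still counted.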

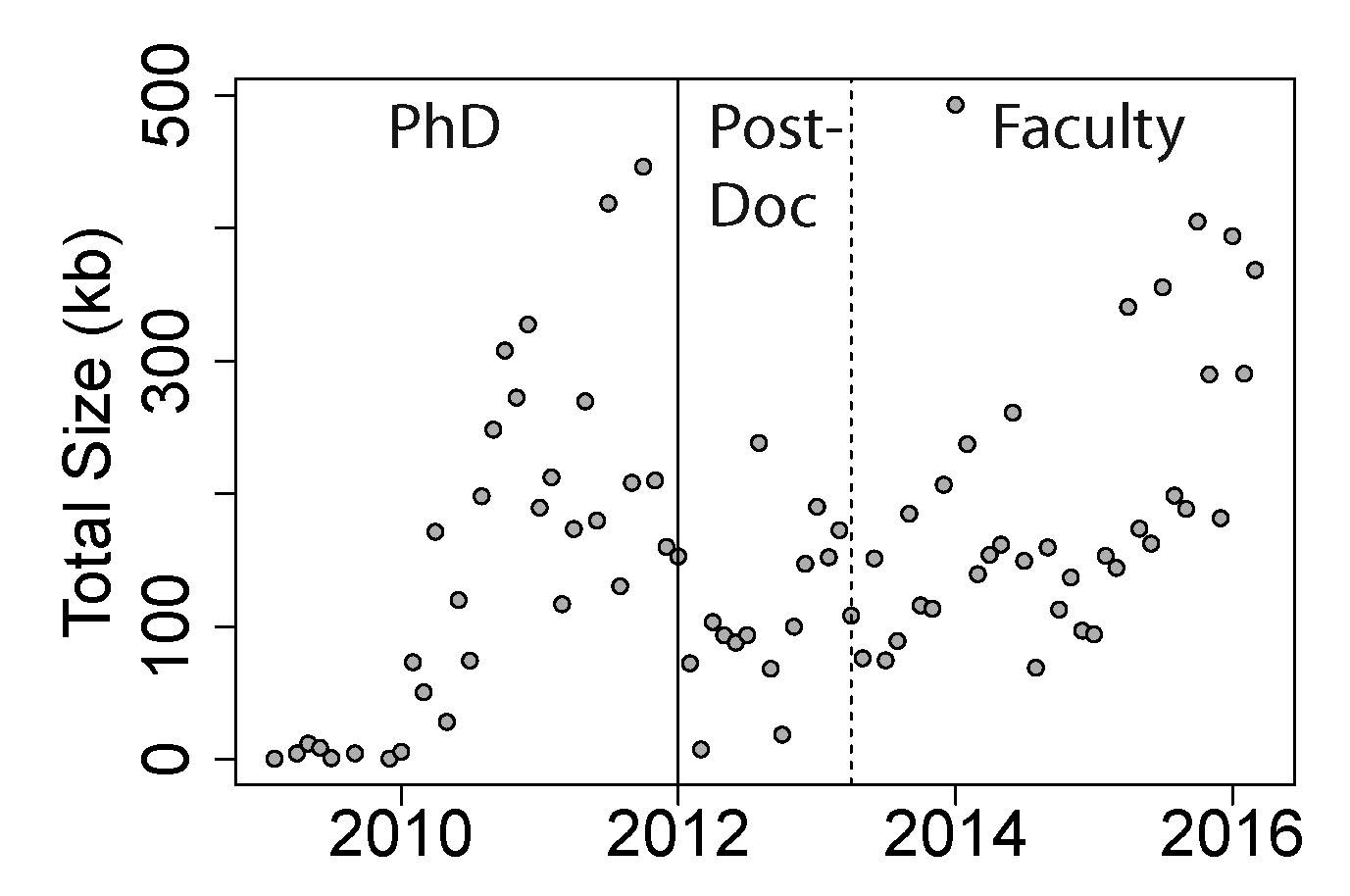

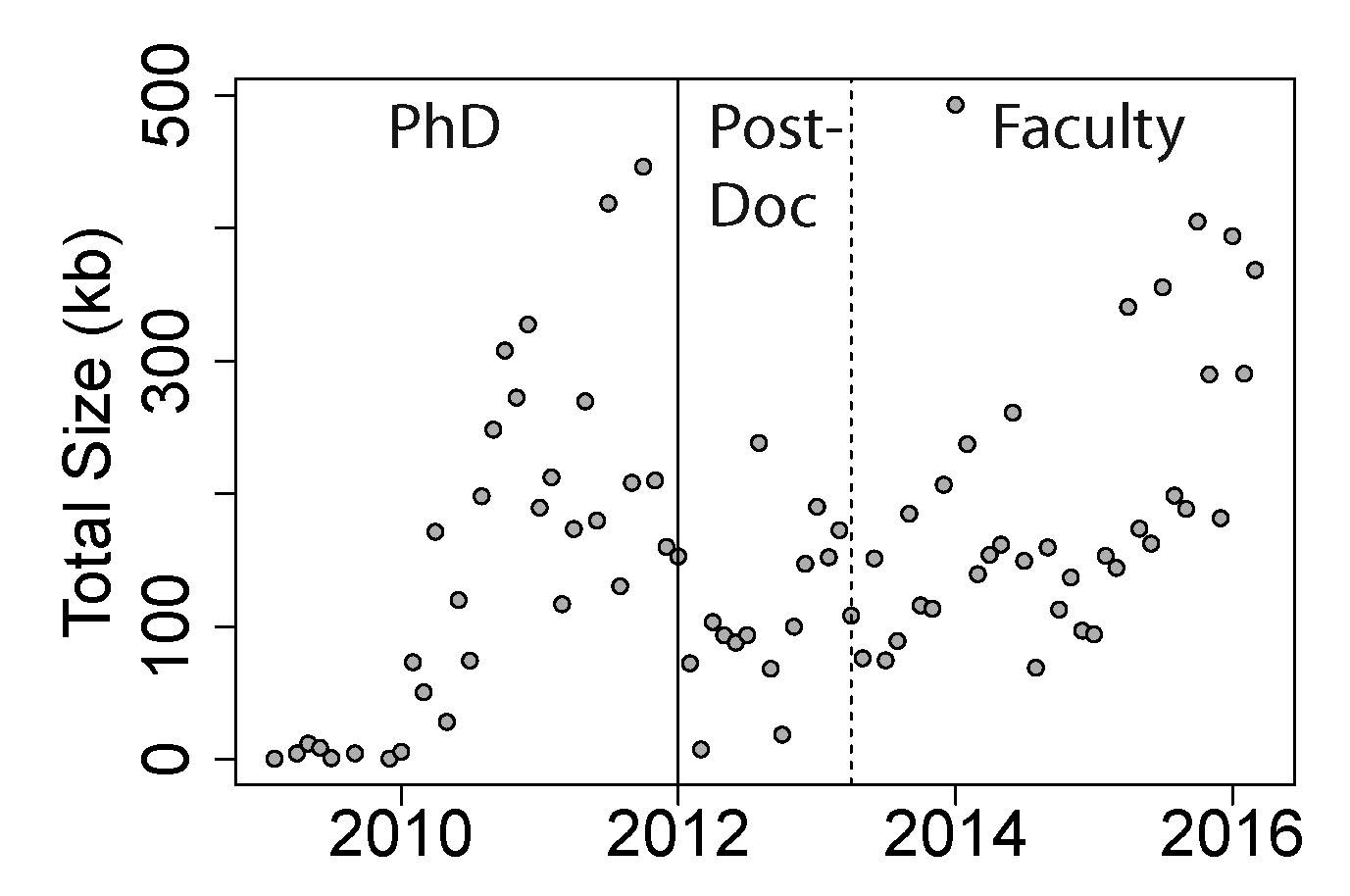

I found that my R coding output has generally increased over time when tabulated by month (number of scripts: p=6.3e-7, size of files: p=3.5e-9, and number of lines: p=5.0e-9). These three metrics of coding output (number, size, and lines) also suggest that, on average, I wrote the most code during my PhD (p-values ranging from 1.8e-7 to 1.7e-4). Interestingly, the changes in output over time were consistent across the three phases of my academic career: PhD, postdoc, and faculty (see Figure 1). You can see the initial dropoff in production during the first one or two months after I finished my PhD and transitioned to a postdoc at the Lieber Institute for Brain Development (LIBD). My output rate has dropped slightly as a faculty member, as I started working with doctoral students who took over the analyses of some projects (month-by-output interaction p-values: 5.3e-4, 0.002, and 0.03 for number, size, and lines, respectively). The mean coding output (on average, how much code a single analysis takes) also increased over time and slightly decreased at LIBD, although to lesser extents (all p-values were between 0.01 and 0.05). I was actually surprised that coding output increased rather than decreased over time; any gains in coding efficiency were probably canceled out by my often more modular analyses at LIBD.

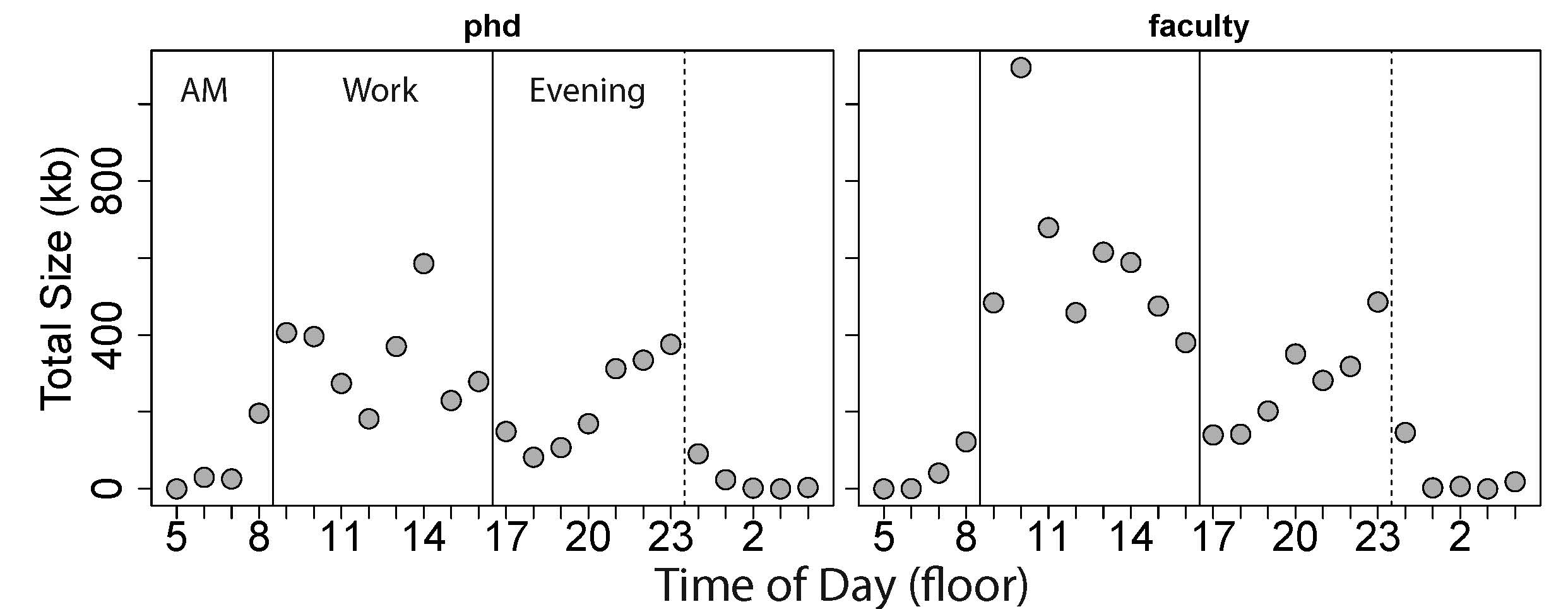

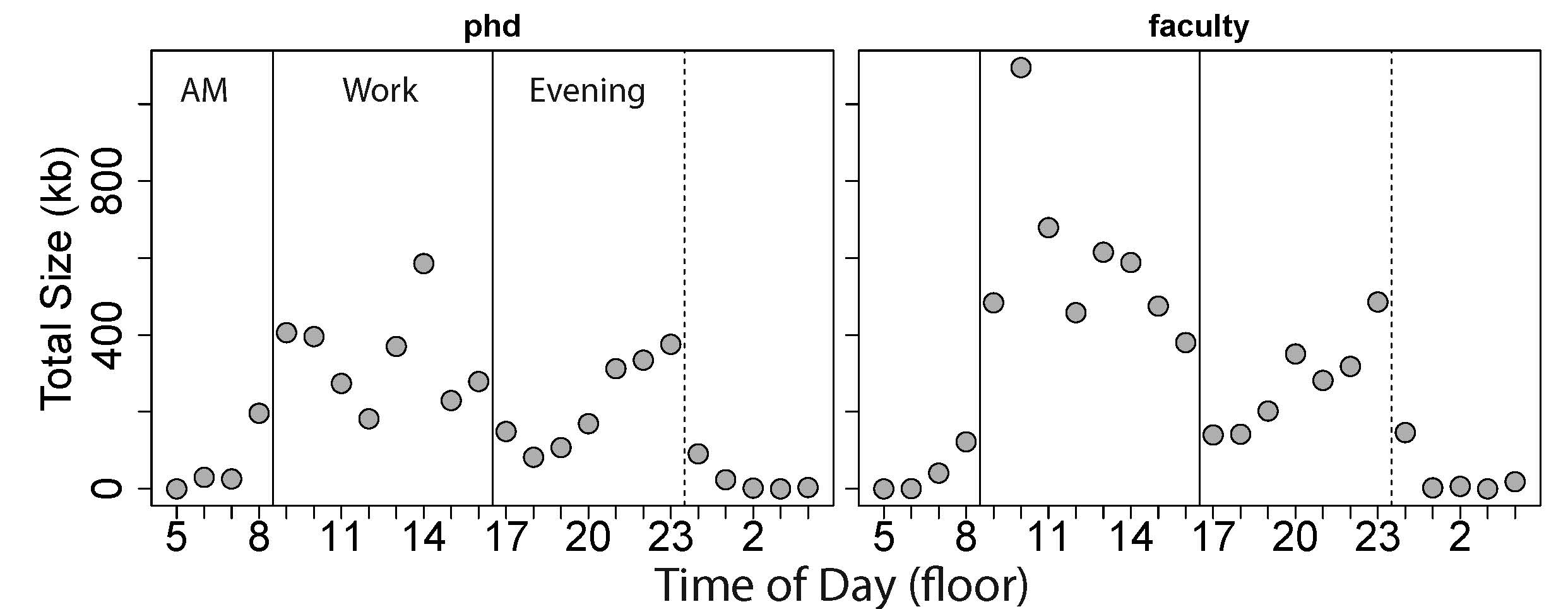

I also looked at coding output by hour of the day to better characterize my working habits; Figure 2 shows the output per hour stratified by two eras of about three years each. As expected, I never really work much in the morning: very little work gets done before 8AM, and little has changed since I was a second-year PhD student. As a faculty member, I have the highest output between 9AM and 3PM. The trough between 4PM and 7PM likely corresponds to walking the dog we got three years ago, working out, and cooking (and eating) dinner. Output then increases steadily from 8PM to 12AM, when I can work largely uninterrupted by meetings and people dropping by my office, with occasional days (or nights) working until 1AM.

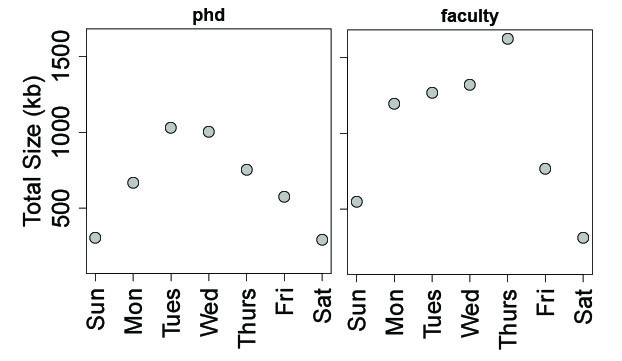

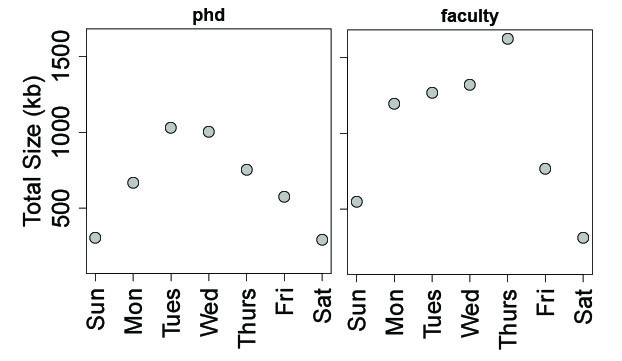

Lastly, I examined R coding output by day of the week. As expected, the lowest output occurred over the weekend, especially on Saturdays. Interestingly, as a faculty member I tended to increase output later in the work week, and I also worked a little more on Sundays and Mondays than I did as a PhD student.
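Tabulating output by hour and by weekday amounts to grouping each script's line count by a timestamp. Here is a minimal Python sketch, assuming each script is summarized as a (last-modified datetime, line count) pair and that the two eras are separated by a single cutoff date you choose; the post specifies neither detail, and `output_by_time` and `split_by_era` are hypothetical names.

```python
from collections import Counter
from datetime import datetime

WEEKDAYS = ["Monday", "Tuesday", "Wednesday", "Thursday",
            "Friday", "Saturday", "Sunday"]


def output_by_time(records):
    """Sum line counts by hour of day (0-23) and by weekday name.

    records: iterable of (modified: datetime, n_lines: int) pairs.
    """
    by_hour = Counter()
    by_weekday = Counter()
    for modified, n_lines in records:
        by_hour[modified.hour] += n_lines
        # datetime.weekday() returns 0 for Monday through 6 for Sunday
        by_weekday[WEEKDAYS[modified.weekday()]] += n_lines
    return by_hour, by_weekday


def split_by_era(records, cutoff: datetime):
    """Split records into those before the cutoff and those on or after it."""
    records = list(records)
    before = [r for r in records if r[0] < cutoff]
    after = [r for r in records if r[0] >= cutoff]
    return before, after
```

Calling `output_by_time` separately on each half returned by `split_by_era` gives per-era hourly and daily totals. Using a fixed weekday list instead of `strftime("%A")` keeps the labels independent of the system locale.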

Looking at the code itself, of the 432,753 lines, 84,343 were blank lines (19.5%), 66,900 contained only comments (15.5%), and an additional 6,994 contained comments following R code (1.6%). My most frequently used syntax and symbols, counted as the number of lines containing each at least once, were pretty much as expected (after dropping commas and splitting on whitespace):

| Code | Count | Code | Count |
|---|---|---|---|
| `=` | 175604 | `==` | 5542 |
| `#` | 48763 | `<` | 5039 |
| `<-` | 16492 | `for(i` | 5012 |
| `{` | 11879 | `&` | 4803 |
| `}` | 11612 | `the` | 4734 |
| `in` | 10587 | `function(x)` | 4591 |
| `##` | 8508 | `###` | 4105 |
| `~` | 6948 | `-` | 4034 |
| `>` | 5621 | `%in%` | 3896 |
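The line categories and symbol counts above can be approximated with simple string rules. This Python sketch is a naive heuristic, not the author's method: it treats any `#` as the start of a comment (so a `#` inside a string literal is miscounted), and it counts a token at most once per line after deleting commas and splitting on whitespace. The function names are hypothetical.

```python
from collections import Counter


def classify_lines(lines):
    """Bucket lines as blank, comment-only, code with a trailing comment, or code.

    Naive heuristic: any '#' is treated as a comment marker, even inside strings.
    """
    counts = Counter()
    for line in lines:
        stripped = line.strip()
        if not stripped:
            counts["blank"] += 1
        elif stripped.startswith("#"):
            counts["comment_only"] += 1
        elif "#" in stripped:
            counts["code_with_comment"] += 1
        else:
            counts["code"] += 1
    return counts


def token_line_counts(lines):
    """For each token, count how many lines contain it at least once.

    Commas are deleted and the remaining text is split on whitespace.
    """
    counts = Counter()
    for line in lines:
        # set() ensures a token repeated on one line is counted only once
        counts.update(set(line.replace(",", "").split()))
    return counts
```

Passing the lines of each script (for example, from `str.splitlines()`) to both functions and summing the resulting `Counter` objects across scripts gives corpus-wide totals in the same spirit as the table.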

My code is available on GitHub: https://github.com/andrewejaffe/how-many-lines (after removing file paths and names, as many of the projects are currently unpublished and many files are placed in folders named by collaborator), so feel free to give it a try and see how much R code you’ve written over your career. While there are probably a lot more things to play around with and explore, this was about all the time I could commit to this, given other responsibilities (I’m not on sabbatical like Jeff Leek…). All in all, this was a pretty fun experience and largely reflected, with data, how my R skills and experience have progressed over the years.

14 Mar 2016

We’ve started a Patreon page! Now you can support the podcast directly by going to our page and making a pledge. This will help Hilary and me build the podcast, add new features, and get some better equipment.

Episode 11 is an all-craft episode of Not So Standard Deviations, where Hilary and Roger discuss starting and ending a data analysis. What do you do at the very beginning of an analysis? Hilary and Roger talk about some of the things that seem to come up all the time. Also up for discussion are the American Statistical Association’s statement on p-values, famous statisticians on Twitter, and evil data scientists on TV. Plus two new things for free advertising.

Subscribe to the podcast on iTunes.

Please leave us a review on iTunes!

Show notes:

Download the audio for this episode.

26 Feb 2016

The current publication system works something like this:

Coupled review and publication

1. You write a paper
2. You submit it to a journal
3. It is peer reviewed privately
4. The paper is accepted or rejected
   - If rejected, go back to step 2 and start over
   - If accepted, it will be published
5. If published, then people can read it

This system has several major disadvantages that bother scientists. It means all research appears on a lag (whatever the time in peer review is). The lag can be long if the paper is sent to “top tier journals”, rejected, and then filters down to “lower tier” journals before ultimate publication. Another disadvantage is that most people have two options for publishing their papers: (a) closed access journals, where it doesn’t cost anything to publish but the articles are behind paywalls, and (b) open access journals, where anyone can read the articles but it costs money to publish. Especially for junior scientists or folks without resources, this creates a difficult choice because they might not be able to afford open access fees.

For a number of years, some fields like physics (with the arXiv) and economics (with NBER) have solved this problem by decoupling peer review and publication. In these fields the system works like this:

Decoupled review and publication

1. You write a paper
2. You post a preprint
   - Everyone can read and comment
3. You submit it to a journal
4. It is peer reviewed privately
5. The paper is accepted or rejected
   - If rejected, go back to step 3 and start over
   - If accepted, it will be published

Lately there has been growing interest in this same system in molecular and computational biology. I think this is a really good thing, because it makes it easier to publish papers quickly and doesn’t cost researchers anything to publish. That is why the papers my group publishes all show up on bioRxiv or arXiv first.

While I think this decoupling is great, there seems to be a push for decoupling and, at the same time, a move toward post-publication peer review.

I used to argue pretty strongly for post-publication peer review, but Rafa set me straight and pointed out that, at least with pre-publication peer review, every paper that gets submitted gets evaluated by someone, even if the paper is ultimately rejected.

One of the risks of post-publication peer review is that there is no incentive to peer review in the current system. In a paper a few years ago, I actually showed that under an economic model for closed peer review, the Nash equilibrium is for no one to peer review at all. We showed in that same paper that under open peer review there is an increase in the amount of time spent reviewing, but the effect was relatively small. Moreover, the dangers of open peer review are clear (junior people reviewing senior people and being punished for it), while the benefits (potentially being recognized for insightful reviews) are much hazier. Even the most vocal proponents of post-publication peer review don’t do it that often when given the chance.

The reason is that everyone in academia already has a lot of things they are asked to do. Many people review papers either out of a sense of obligation or because they want to be in the good graces of a particular journal. Without this system in place, there is a strong chance that peer review rates will drop and only a few papers will get reviewed. This will ultimately decrease the accuracy of science. In our (admittedly contrived and simplified) experiment on peer review, accuracy went from 39% to 78% after solutions were reviewed. You might argue that only “important” papers should be peer reviewed, but then you are back in the camp of glamour journals. Say what you want about glamour journals. They are certainly biased by the names of the people submitting papers there. But it is possible for someone to get a paper in no matter who they are. If we go to a system with no curation through a journal-like mechanism, then popularity, Twitter followers, and the like will drive readership. I’m not sure that is better than where we are now.

So while I think preprints are a great idea, I’m still waiting to see a system that beats pre-publication review for maintaining scientific quality (even though that may just be an impossible problem).
